Help, I'm being attacked by shopping bots!

What's happening?

Some stores tell us that Recapture is logging an abnormally high number of visitor carts on the Live Cart Feed. Most of these carts are created within seconds of each other, during the same few hours of the day.
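
If you can export the creation times of those carts, a short script can confirm the pattern. The Python sketch below groups timestamps into bursts, where each cart arrived within a few seconds of the previous one. It's a rough illustration built on assumptions: the sample times are made up, and the 5-second gap and 3-cart minimum are arbitrary thresholds, not Recapture settings.

```python
from datetime import datetime, timedelta

def find_bursts(timestamps, max_gap=timedelta(seconds=5), min_size=3):
    """Group cart creation times into bursts.

    A burst is a run of carts where each one was created no more than
    `max_gap` after the previous one. Only runs with at least `min_size`
    carts are returned.
    """
    ordered = sorted(timestamps)
    bursts = []
    current = ordered[:1]  # start the first run with the earliest cart, if any
    for prev, ts in zip(ordered, ordered[1:]):
        if ts - prev <= max_gap:
            current.append(ts)  # close enough: same burst
        else:
            if len(current) >= min_size:
                bursts.append(current)
            current = [ts]  # gap too big: start a new run
    if len(current) >= min_size:
        bursts.append(current)
    return bursts

# Made-up sample data: four carts 2 seconds apart at 03:00, then two ordinary visitors.
sample = [
    datetime(2024, 1, 1, 3, 0, 0),
    datetime(2024, 1, 1, 3, 0, 2),
    datetime(2024, 1, 1, 3, 0, 4),
    datetime(2024, 1, 1, 3, 0, 6),
    datetime(2024, 1, 1, 10, 15, 0),
    datetime(2024, 1, 1, 14, 42, 0),
]

for burst in find_bursts(sample):
    print(f"{len(burst)} carts between {burst[0]:%H:%M:%S} and {burst[-1]:%H:%M:%S}")
# prints: 4 carts between 03:00:00 and 03:00:06
```

Real shoppers rarely create several carts a couple of seconds apart, so long bursts like this one are a strong hint that a script is at work.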

We examined the data on a few of those sites and concluded that these carts were not abandoned by actual visitors. So where did they come from?

Bots. Your store is being visited by bots. Sometimes this bot is friendly (Hello, Google Shopping bot!) and sometimes not.

Why do bots crawl my store?

Web crawling started as a way to map the Internet and how websites were connected to one another. Search engines also use crawling to discover and index new pages, and crawlers have been used to probe websites for vulnerabilities by testing them and flagging any issues they find.

Friendly bots crawl your site to discover your products and see what's available, then use that information to aggregate shopping data from around the internet. Less friendly bots might be probing your store for known vulnerabilities.

How does crawling work?

To crawl the web or a website, robots have to start somewhere: they need to know your website exists before they can come and have a look at it. Back in the day, you would submit your website to search engines to tell them it was online. Now a few links to your site from elsewhere are enough, and voilà, you're in the loop!

Once a crawler lands on your website, it analyzes the page content and follows each link it finds, whether internal or external. It keeps going until it reaches a page with no links or runs into an error such as 404, 403, 500 or 503.
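
The process above is essentially a breadth-first walk over your links. Here's a toy Python sketch of that loop over a hypothetical in-memory store (the paths, links and status codes are made up, and no real HTTP requests are made). It shows how a crawler that simply follows every link also ends up visiting add-to-cart links. On stores where adding to cart is a plain link, such as WooCommerce's ?add-to-cart= URLs, each of those visits would create a cart.

```python
from collections import deque

# Hypothetical store: each path maps to (HTTP status, links found on that page).
SITE = {
    "/": (200, ["/shop/", "/about/"]),
    "/shop/": (200, ["/product/t-shirt/", "/product/mug/"]),
    "/product/t-shirt/": (200, ["/?add-to-cart=101", "/shop/"]),
    "/product/mug/": (200, ["/?add-to-cart=102", "/old-page/"]),
    "/?add-to-cart=101": (200, ["/cart/"]),
    "/?add-to-cart=102": (200, ["/cart/"]),
    "/cart/": (200, []),      # no links: a dead end for the crawler
    "/about/": (200, []),
    "/old-page/": (404, []),  # error: also a dead end
}

def crawl(start="/"):
    """Visit every reachable page once, following links breadth-first."""
    seen = {start}
    queue = deque([start])
    visited = []
    while queue:
        path = queue.popleft()
        status, links = SITE.get(path, (404, []))  # unknown pages act like 404s
        visited.append((path, status))
        if status != 200:
            continue  # don't follow links from error pages
        for link in links:
            if link not in seen:  # queue each page only once
                seen.add(link)
                queue.append(link)
    return visited

for path, status in crawl():
    print(status, path)
```

Real crawlers add politeness delays, robots.txt checks and much more; the point here is only that a link-following loop reaches every add-to-cart link it can see.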

Because Recapture tracks all carts on your store, if a bot creates a cart by visiting the page that adds an item, we'll end up tracking that cart too. These carts rarely look "normal", though. They may contain absurdly high quantities (like 10,000,000 of one item) or one of every item in your store. In short, they look like no human ever created them.
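
To make "no human ever created them" concrete, here's a hypothetical Python heuristic that flags the two patterns above. The Cart format, the quantity limit of 100 and the 90% catalog-coverage threshold are all assumptions for illustration; this is not how Recapture decides anything.

```python
from dataclasses import dataclass

@dataclass
class Cart:
    items: dict[str, int]  # product ID -> quantity (hypothetical format)

def looks_like_bot_cart(cart, catalog_size, max_quantity=100, coverage=0.9):
    """Return a list of reasons this cart looks machine-made (empty if none)."""
    reasons = []
    # Absurd quantities, like 10,000,000 of one item.
    biggest = max(cart.items.values(), default=0)
    if biggest > max_quantity:
        reasons.append(f"quantity {biggest} exceeds {max_quantity}")
    # One of (nearly) every product in the store is rarely a real shopper.
    if catalog_size and len(cart.items) >= coverage * catalog_size:
        reasons.append(f"contains {len(cart.items)} of {catalog_size} products")
    return reasons

print(looks_like_bot_cart(Cart({"mug": 10_000_000}), catalog_size=50))
# ['quantity 10000000 exceeds 100']
print(looks_like_bot_cart(Cart({"mug": 1, "t-shirt": 2}), catalog_size=50))
# []
```

On a store with only a handful of products, the coverage check would flag ordinary carts, so you'd want to tune or drop it there.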

How do I stop it from happening?

There are a few solutions you can use on your store, depending on your platform. We recommend applying as many of them as you can, since together they stop both good and bad bots.

Solution 1: (All)

Use Recapture's cart exclusion feature to automatically delete "weird" carts. You can delete by product or you can delete by amount. Set this under Cart Settings, which you can access here: https://app.recapture.io/account/abandoned-carts/settings.

Solution 2: (Shopify)

Enable Shopify's bot protection for your store: https://help.shopify.com/en/manual/checkout-settings/bot-protection.

Solution 3: Use robots.txt (where you have access to it)

Good robots mostly do what they're told, and you can control their behavior on your store using a file called robots.txt. It tells crawlers what pages they are allowed to access on your site.

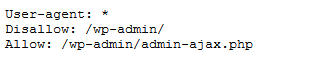

When you create any website, you usually have an existing robots.txt file. For WordPress, this is located in your server’s main folder. For example, if your site is located at yourfakewebsite.com, you should be able to visit the address yourfakewebsite.com/robots.txt and see a file like this come up:

For WordPress sites, open the file in an editor and add these lines:

User-agent: *
Disallow: /*add-to-cart=*

The first line says that the rules that follow apply to all bots (the * matches any user agent). The second line tells them not to crawl any URL containing add-to-cart=, which is the pattern WooCommerce uses for its add-to-cart links (for example, yourfakewebsite.com/?add-to-cart=123).
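
If you want to sanity-check what a pattern like /*add-to-cart=* blocks, here's a minimal Python sketch of wildcard matching as standardized in RFC 9309: `*` matches any run of characters, a trailing `$` anchors the end, and when several rules match, the longest one wins, with Allow winning ties. Matching against the path plus the query string is an assumption that mirrors how Google applies such rules. This handles a single user-agent group only and is not a full robots.txt parser. Python's built-in `urllib.robotparser` isn't a substitute here, because it does plain prefix matching and treats `*` inside a path literally.

```python
import re
from urllib.parse import urlsplit

def rule_to_regex(pattern):
    """Translate a robots.txt path pattern into a regex (RFC 9309 wildcards)."""
    anchored = pattern.endswith("$")  # a trailing $ means "URL must end here"
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then let each * match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))

def is_allowed(rules, url):
    """Decide whether `url` may be crawled under one user-agent group.

    `rules` is a list of ("allow" | "disallow", pattern) pairs. The longest
    matching pattern wins; on a tie, allow beats disallow.
    """
    parts = urlsplit(url)
    # Match against the path plus query string, e.g. "/?add-to-cart=101".
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    best = None  # (pattern length, is_allow) of the most specific match so far
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty pattern matches nothing (empty Disallow blocks nothing)
        if rule_to_regex(pattern).match(target):  # match() anchors at the start
            candidate = (len(pattern), kind == "allow")
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]  # no matching rule: allowed

rules = [("disallow", "/*add-to-cart=*")]
print(is_allowed(rules, "https://yourfakewebsite.com/?add-to-cart=101"))       # False
print(is_allowed(rules, "https://yourfakewebsite.com/shop/?add-to-cart=101"))  # False
print(is_allowed(rules, "https://yourfakewebsite.com/product/t-shirt/"))       # True
```

Product and category pages stay crawlable, while any URL carrying add-to-cart= in its path or query is off-limits to bots that honor the file.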

We strongly recommend that you also stop the Cart, Checkout and My Account pages from being crawled. For WooCommerce, you can do this by adding the lines below under the same User-agent: * line:

Disallow: /cart/
Disallow: /checkout/
Disallow: /my-account/

The exact paths for Easy Digital Downloads (EDD) or Shopify may differ slightly.

This lets crawlers index the rest of your site while stopping them from following add-to-cart links and creating unnecessary visitor carts.
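
For plain prefix rules like the Cart, Checkout and My Account lines, Python's standard-library `urllib.robotparser` can confirm the effect locally before you upload anything. This sketch checks a robots.txt containing only those three WooCommerce paths against a few URLs on the placeholder domain yourfakewebsite.com; `MyCrawler` is a made-up user-agent name. The add-to-cart rule is deliberately left out, because `urllib.robotparser` does plain prefix matching and doesn't understand `*` wildcards.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /my-account/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
# can_fetch() refuses every URL until a load time is recorded, so record one
# explicitly instead of relying on read() having fetched a live file.
parser.modified()

for path in ["/shop/", "/product/t-shirt/", "/cart/", "/checkout/", "/my-account/orders/"]:
    url = "https://yourfakewebsite.com" + path
    print(parser.can_fetch("MyCrawler", url), url)
```

The shop and product pages come back True (crawlable), while the cart, checkout and account pages come back False.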

Once these carts stop being logged, it becomes easier to analyze your abandoned cart data on your Analytics page.

For Shopify, you edit this file through the robots.txt.liquid theme template, which is explained in detail in Shopify's Liquid documentation.

Solution 4: Use Cloudflare (or similar) to block bad actors

Cloudflare is a proxy service that helps block bad traffic and prevent distributed denial-of-service (DDoS) attacks on your site. It's a powerful way to protect your store from people with bad intentions, and it's especially useful against bad bot attacks.

Make sure you pick their FREE plan. It's hard to find when you're signing up (it's at the bottom of their pricing page), but don't be scared off by the high prices: you don't need a paid plan to get the bot protection from their proxy service.

Most of our supported platforms work well with Cloudflare: Magento, Shopify and WordPress sites can all be protected by it. Here are the articles that will walk you through the setup:

Solution 5: (WordPress) Use a plugin to block bots

Solution 6: (Advanced) Edit your .htaccess file directly

For details, see this article: https://www.seoblog.com/block-bots-spiders-htaccess/